Bridge Report: (4388) AI, Inc., the Second Quarter of Fiscal Year March 2020

Representative Director Daisuke Yoshida | AI, Inc. (4388) |

Corporate Information

Exchange | TSE Mothers |

Industry | Information and communications |

Representative Director | Daisuke Yoshida |

Address | KDX Kasuga Building 10F, 1-15-15 Nishikata, Bunkyo Ward, Tokyo |

Year-end | March |

URL |

Stock Information

Share Price | Shares Outstanding | Total Market Cap | ROE (Actual) | Trading Unit | |

¥1,782 | 5,123,000 shares | ¥9,129 million | 16.7% | 100 shares | |

DPS (Estimate) | Dividend Yield (Estimate) | EPS (Estimate) | PER (Estimate) | BPS (Actual) | PBR (Actual) |

¥6.00 | 0.3% | ¥32.84 | 54.3x | ¥219.14 | 8.1x |

*The share price is the closing price on November 29. The number of shares outstanding, DPS and EPS are taken from the brief financial report for the first half of the FY March 2020.

*ROE and BPS are the results of the previous term.
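The valuation figures in the Stock Information table can be cross-checked from the quoted inputs. The following is a minimal sketch, assuming the usual definitions (market cap = price × shares, PER = price / EPS, PBR = price / BPS, dividend yield = DPS / price); the variable names are illustrative and not taken from the report.

```python
# Inputs copied from the Stock Information table.
share_price = 1782.0            # yen, closing price on November 29
shares_outstanding = 5_123_000  # shares
eps_estimate = 32.84            # yen
bps_actual = 219.14             # yen
dps_estimate = 6.00             # yen

# Standard valuation ratios (assumed definitions, see lead-in).
market_cap_million = share_price * shares_outstanding / 1_000_000
per = share_price / eps_estimate
pbr = share_price / bps_actual
dividend_yield_pct = dps_estimate / share_price * 100

print(f"Market cap:     {market_cap_million:,.0f} million yen")
print(f"PER:            {per:.1f}x")
print(f"PBR:            {pbr:.1f}x")
print(f"Dividend yield: {dividend_yield_pct:.1f}%")
```

With these inputs the rounded results agree with the table (¥9,129 million, 54.3x, 8.1x, 0.3%).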

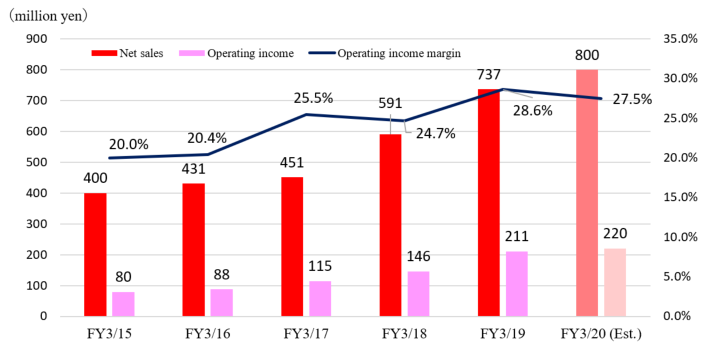

Earnings Trends

Fiscal Year | Net Sales | Operating Income | Ordinary Income | Net Income | EPS | DPS |

March 2017 (Actual) | 451 | 115 | 116 | 76 | 19.57 | 0.00 |

March 2018 (Actual) | 591 | 146 | 147 | 109 | 24.73 | 0.00 |

March 2019 (Actual) | 737 | 211 | 202 | 150 | 30.84 | 8.00 |

March 2020 (Estimate) | 800 | 220 | 220 | 160 | 32.84 | 6.00 |

* Unit: Million yen, yen. The estimated values are provided by the company.

*DPS ¥8.00 for FY 2019 includes ¥3.00 commemorative dividend.
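The year-on-year sales growth and the payout ratio quoted in the Key Points can be derived from the Earnings Trends table. This is a small sketch under the assumption that growth is computed on the rounded million-yen figures and that the payout ratio is DPS divided by EPS; the helper name `yoy_growth_pct` is hypothetical.

```python
# Net sales in million yen, from the Earnings Trends table.
net_sales = {
    "March 2017": 451,
    "March 2018": 591,
    "March 2019": 737,
    "March 2020 (Estimate)": 800,
}
eps_estimate = 32.84  # yen, March 2020 estimate
dps_estimate = 6.00   # yen, March 2020 estimate


def yoy_growth_pct(previous, current):
    """Year-on-year growth in percent."""
    return (current - previous) / previous * 100


# Pair each fiscal year with the one before it (dicts keep insertion order).
years = list(net_sales)
sales_growth = {
    curr: yoy_growth_pct(net_sales[prev], net_sales[curr])
    for prev, curr in zip(years, years[1:])
}
payout_ratio_pct = dps_estimate / eps_estimate * 100

for year, growth in sales_growth.items():
    print(f"{year}: {growth:+.1f}% net sales growth")
print(f"Estimated payout ratio: {payout_ratio_pct:.1f}%")
```

For the March 2020 estimate this gives 8.5% sales growth and an 18.3% payout ratio, matching the figures in the text. Growth rates computed from rounded table values can differ slightly from rates the company computes on unrounded figures.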

This report presents AI, Inc.’s earnings results for the second quarter of the fiscal year ending March 2020.

Table of Contents

Key Points

1. Company Overview

2. The Second Quarter of March 2020 Earning Results

3. Fiscal Year March 2020 Earnings Estimates

4. Conclusions

<Reference: Regarding Corporate Governance>

Key Points

- AI, Inc. offers a speech synthesis engine and solutions regarding speech synthesis. The company provides corporations and consumers with products and services based on “AITalk®,” a speech synthesis engine developed by the company, for automatic answering systems, car navigation systems, anti-disaster wireless systems, smartphones, communication robots, in-vehicle devices, and games. The company has unrivaled characteristics and strengths; for example, it can synthesize high-quality speeches from a few voice samples and offer many speakers.

- Sales for the second quarter of the term ending March 2020 were 311 million yen, up 5.6% year-on-year. Sales of products and services provided to corporations grew, while sales of products to consumers declined. SG&A expenses increased 11.7% year-on-year due to the bolstering of the workforce, resulting in an 8.6% year-on-year fall in operating income to 63 million yen. Ordinary income increased 11.1% year-on-year to 63 million yen after the elimination of temporary expenses attributable to the stock market listing. SG&A expenses were lower than estimated, thanks to postponed recruitment and reduced recruitment costs, so profit was substantially higher than the estimate.

- The full-year earnings forecast is unchanged. Sales are estimated to grow 8.5% year-on-year to 800 million yen and operating income to rise 4.2% year-on-year to 220 million yen. With the expansion of the speech synthesis market, sales and profit are projected to grow as in the previous term. The dividend is expected to increase by 1 yen per share, from the last term’s ordinary dividend of 5 yen per share to 6 yen per share. The estimated payout ratio is 18.3%. Incidentally, the 8 yen per share dividend paid for the term ended March 2019 consisted of an ordinary dividend of 5 yen per share and a commemorative dividend of 3 yen per share.

- We were worried about the delay in releasing the company’s original products for Nuance Communications, which had been scheduled for June 2019. However, Nuance Communications’ automotive department became independent and was newly established as Cerence Inc., to which the agreement was transferred. The very nature of automobiles is expected to change along with concepts such as C.A.S.E. (Connectivity, Autonomous, Sharing/Subscription, and Electrification); the role of speech synthesis engines is expected to extend beyond conventional car navigation, and demand for them is expected to increase rapidly. We are paying attention to the company’s business expansion in the medium to long term, as it has started commercializing the next-generation speech synthesis engine, AITalk®5 (provisional name).

- On the other hand, sales and income are expected to keep increasing this term; however, the growth rates of both are in single digits, which leaves something to be desired for a company that is expected to grow. We look forward to seeing how sales and profit perform once the utilization of its original products and services increases.

1. Company Overview

AI, Inc. offers a speech synthesis engine and solutions regarding speech synthesis. “AITalk®,” which is a speech synthesis engine developed by the company, is offered to corporations for producing voices for automatic answering, car navigation, and anti-disaster wireless systems, and also as an audio communication system for smartphones, communication robots, in-vehicle devices, and automated call center operation. It also sells products targeted at consumers, including VOICEROID.

【1-1 Corporate history】

When the founder Daisuke Yoshida (representative director of AI, Inc.) was working for Advanced Telecommunications Research Institute International*, he encountered speech synthesis technology and sensed that it was a promising technology that would contribute to society. The technology was still immature, but he established AI, Inc. in April 2003 for the purpose of putting that technology into practice, spreading it, and commercializing it.

In 2007, the company started licensing the “AITalk®” series, a speech synthesis engine developed by the company. Later, it developed a variety of products and services based on “AITalk®.” Its unique features, including “a wide array of speakers and languages” and “reduction of time and expenses with a small amount of voice samples,” were highly regarded. Since it was adopted by the government for anti-disaster wireless communication, it has been adopted by many institutions and applied in a wider variety of cases.

In June 2018, the company was listed on the Mothers market of the Tokyo Stock Exchange.

*Advanced Telecommunications Research Institute International (ATR)

It was established in 1986, following the concept set out by the preparatory meeting held by the then Posts and Telecommunications Ministry, NTT, Japan Business Federation, Kansai Economic Federation, universities, etc., with the mission of promoting pioneering, unique research in the field of information and communications through international collaboration among government, industry, and academia. 111 companies, including NTT and KDDI, hold a stake in it.

【1-2 Corporate mission, Vision】

On November 11, 2019, the company renewed its logo, corporate philosophy, and vision; it newly added a mission, value, and an action policy.

Corporate philosophy | AI will continue to create new value for society with its sound technology. |

Mission | We offer the “convenience” of creating sound and the “fun” of creating it. |

Vision | We will continue to create services that serve society with our sound technology. |

Value | Continue to be the top runner in sound technology. 1. Provide technology and services that make people happy. 2. Create the future together with both our employees and our customers. 3. Take steady steps forward every day. |

Action policy | ・For people who always seek to acquire a new skill and new technology ・Our customers, our employees, and ourselves. A considerate person who aspires to grow on his/her own and pulls the team along. ・An ambitious person who takes steady steps to grow. |

Amid changes in the internal and external business environments, the company has expressed what it aspires to be through its new corporate philosophy, corporate logo, and action policy, with which it aims to gain recognition as a company that provides value to society.

【1-3 Market environment】

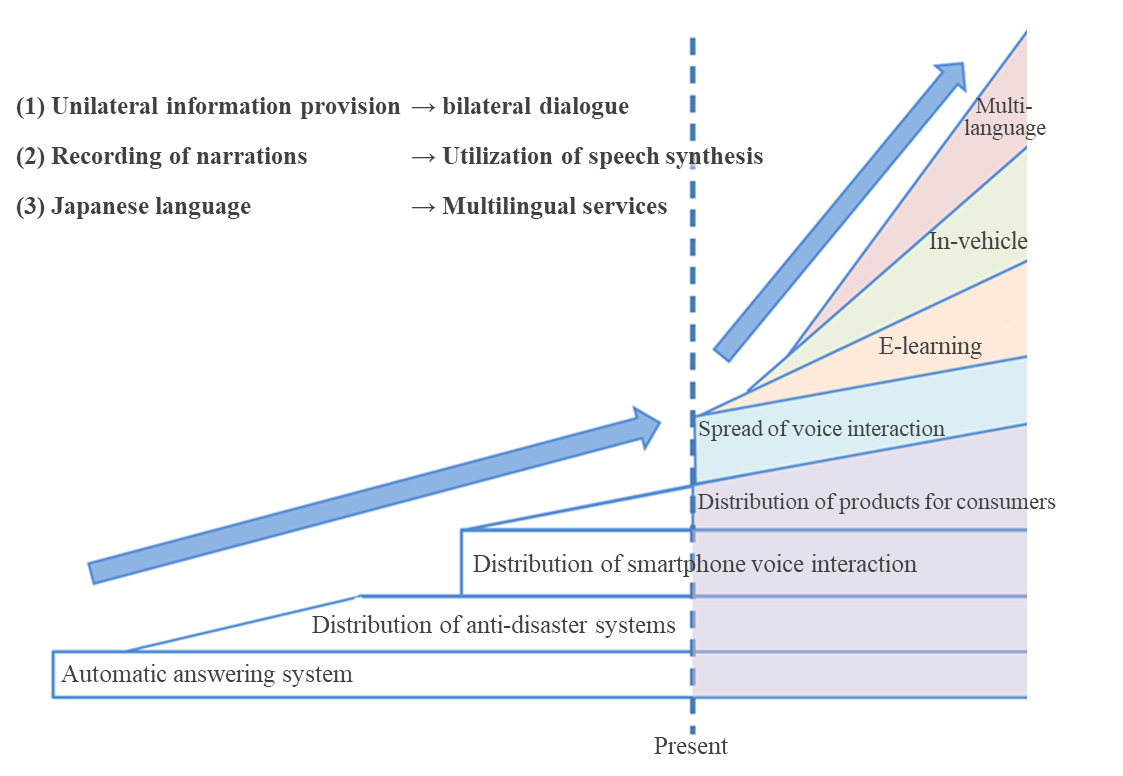

(1) Market environment

The development of speech synthesis technology has a long history. Although it has been adopted for automatic answering machines, anti-disaster announcements, voice interaction via smartphones, etc., its scope of application expanded slowly because the mainstream method was to produce audio data mechanically.

As the technology for producing sounds pronounced by human beings has advanced and artificial intelligence (AI) has evolved in recent years, we have seen the improvements in functions, including the shift from voice-over recording to “the utilization of speech synthesis,” the shift from unilateral provision of information to “the actualization of interactive communication,” and the shift from the Japanese language only to “multiple languages.” Going forward, the scope of application is expected to expand rapidly, and it will be used for e-learning, mobility, robots, AI speakers, etc.

A private research firm predicted that the scale of the global market of voice recognition and speech synthesis technologies will grow from about 47 billion dollars in 2011 to 200 billion dollars in 2025 (compound annual growth rate [CAGR]: about 10%).

(Taken from the reference material of the company)
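The growth rate implied by the two market-size figures above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes the 2011 and 2025 endpoints exactly as quoted; the function name `cagr_pct` is illustrative.

```python
def cagr_pct(start_value, end_value, years):
    """Compound annual growth rate in percent."""
    return ((end_value / start_value) ** (1 / years) - 1) * 100


market_2011 = 47.0    # billion dollars, as quoted
market_2025 = 200.0   # billion dollars, as quoted
growth = cagr_pct(market_2011, market_2025, 2025 - 2011)

print(f"Implied CAGR 2011-2025: {growth:.1f}%")
```

With these rounded endpoints the implied rate comes out a little under 11% per year, in the same range as the "about 10%" cited by the research firm.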

(2) Competitors

Major competitors of “AITalk®,” a speech synthesis engine of AI, Inc., include HOYA Corporation (1st section of TSE, 7741, product name: Voice Text) and Toshiba Digital Solutions Corporation (unlisted, product name: ToSpeak).

Specializing in speech synthesis, AI, Inc. meets the requests from users swiftly and flexibly and secures its market share, by offering services of R&D, product development, sale, and support in an integrated manner.

【1-4 Business contents】

(1) What is the speech synthesis technology?

The voice technology can be roughly classified into the “voice recognition technology” for recognizing voices and translating them into characters, etc., and the “speech synthesis technology” for converting text information into audio data. AI, Inc. has been conducting the “speech synthesis” business, since it was established.

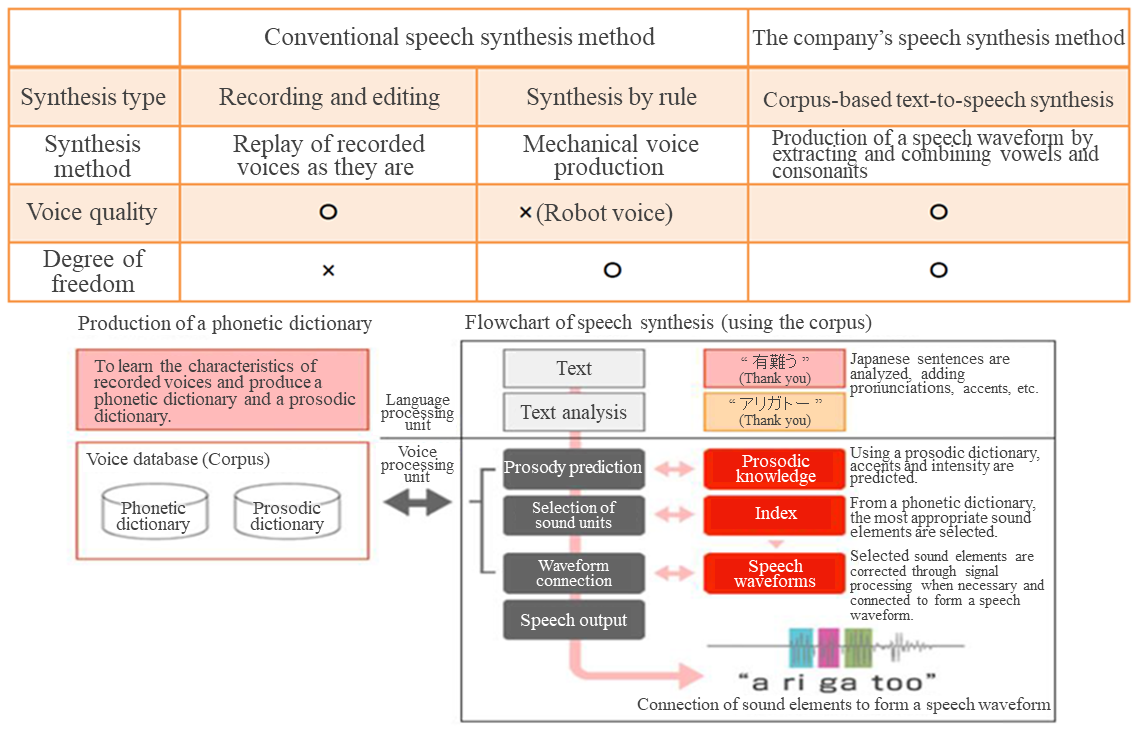

R&D in the speech synthesis field has a long history and dates back to around the 1850s. “Speech synthesis” reminds us of “mechanical sounds and robot voices” developed in around 1940, but AI, Inc. adopted the “corpus-based text-to-speech method.”

(Outline of the corpus-based text-to-speech method)

While the conventional “speech synthesis by rule” produces audio data mechanically, the “corpus-based text-to-speech method” produces a waveform by combining recorded human voices in units of vowels and consonants. Accordingly, sounds are derived from human voices rather than mechanical sounds.

The technology for “corpus-based text-to-speech synthesis” consists of two technologies: “a technology for producing a phonetic dictionary” and “a speech synthesis technology for producing audio data from text information.”

Technology for producing a phonetic dictionary | This technology records the voices of a specific person, breaks down recorded voices into sound elements, that is, vowels and consonants, and produces a phonetic dictionary (a collection of sound elements) and a prosodic dictionary (prosodic information of recorded voices). The precision of the task of producing a phonetic dictionary is essential for enhancing the reproducibility of recorded human voices. |

Speech synthesis technology | This technology is composed of “a language processing unit,” which analyzes Japanese text and adds information on pronunciations and accents, and “a voice processing unit,” which predicts prosodic information with reference to the prosodic dictionary, selects the most appropriate sound elements from the phonetic dictionary, connects them to the sound waveform again, and outputs a speech. Both units require the precisions in the analysis of the Japanese language, prosody prediction, and the connection to sound waveforms. When these precisions are improved, it is possible to produce synthetic sounds that are very similar to recorded human voices, as the sound elements of recorded voices are recombined to output a speech. |

(Taken from the reference material of the company)
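The flow described in the table (text → sound elements → lookup in a phonetic dictionary → joined waveform) can be illustrated with a toy example. This is only a sketch of the general concatenative idea, not AITalk®'s implementation: the sine bursts stand in for recorded sound elements, prosody prediction and unit selection are omitted, and all names (`phonetic_dictionary`, `language_processing`, `crossfade_concat`, `synthesize`) are hypothetical.

```python
import math

SAMPLE_RATE = 8000  # Hz, arbitrary value for this toy example


def tone(freq_hz, n_samples):
    """Stand-in for a recorded sound element: a short sine burst."""
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n_samples)]


# Hypothetical phonetic dictionary: sound element -> stored waveform.
phonetic_dictionary = {
    "k": tone(900, 80),
    "o": tone(300, 160),
    "e": tone(400, 160),
}


def language_processing(text):
    """Toy language processing unit: split text into known sound elements."""
    return [ch for ch in text if ch in phonetic_dictionary]


def crossfade_concat(waves, overlap=16):
    """Toy voice processing unit: join waveforms with a linear crossfade."""
    out = []
    for wave in waves:
        if not out:
            out.extend(wave)
            continue
        n = min(overlap, len(out), len(wave))
        for i in range(n):
            w = (i + 1) / (n + 1)  # weight of the incoming waveform
            out[-n + i] = out[-n + i] * (1 - w) + wave[i] * w
        out.extend(wave[n:])
    return out


def synthesize(text):
    """Look up each sound element and connect the waveforms."""
    elements = language_processing(text)
    return crossfade_concat([phonetic_dictionary[e] for e in elements])


waveform = synthesize("koe")
print(f"{len(waveform)} samples, {len(waveform) / SAMPLE_RATE * 1000:.0f} ms")
```

The crossfade at each joint is a simple way to avoid clicks where elements meet; a production engine would instead choose elements and joints using the prosodic dictionary described above.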

(2) “AITalk®,” a high-quality Japanese speech synthesis engine

“AITalk®” is a high-quality speech synthesis engine researched and developed by the company based on the “corpus-based text-to-speech synthesis technology,” which produces sounds based on human voices.

The following section will describe the features of “AITalk®,” which can synthesize speeches freely with more human-like and natural voices, major application cases, and outlines of products based on “AITalk®.”

① Characteristics of “AITalk®”

*A diverse lineup of speakers and languages

Currently, the Japanese voices of this system range from adults to children and comprise 17 male and female voices (15 in standard Japanese and 2 in the Kansai dialect). From this diverse lineup of voices, customers can choose appropriate ones for various scenes.

*Please try the “demonstration of speech synthesis” in the company’s website at https://www.ai-j.jp/demonstration/.

(Taken from the website of the company)

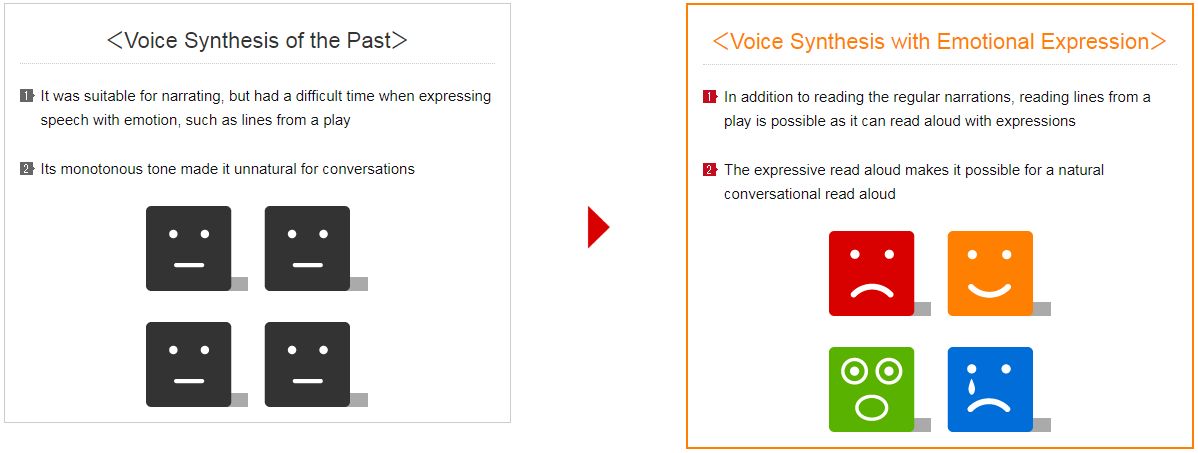

*It is also possible to express emotions.

It is possible to express emotions, including delight, anger, sorrow and pleasure, according to situations and purposes of use.

(Taken from the website of the company)

*Anyone’s voice can be converted into synthetic data.

The voices of entertainers, voice actors, and users recorded for a short period of time can be converted into data for speech synthesis.

Since it is possible to easily produce speeches of real people just by inputting text, it is possible to offer a variety of contents, including online campaigns, smartphone applications, and games.

② Customer segments and major application cases

As the “corpus-based text-to-speech synthesis technology” has advanced, the speech synthesis engine has been adopted in various scenes where recorded voices of voice actors and narrators had been used.

AI, Inc. has a broad range of client enterprises in the fields of communications, disaster prevention, finance, railways, transportation, in-vehicle devices, games, sightseeing, municipalities, and libraries. Over 500 companies adopted the system, and we heard that the number of clients is increasing by 20-30% every term.

As IoT and robots have been popularized and the number of sightseers visiting Japan has increased over the past several years, there are an increasing number of cases in which the system is used as a dialogue solution combining voice recognition and the interpretation of intentions or a speech translation solution combining translation and multilingual speech synthesis. The company expects that the speech synthesis technology will be used for interactive dialogue as part of artificial intelligence, indicating the evolution from the conventional unilateral information provision.

Application case | Outline |

(1) Anti-disaster wireless communication | Many municipalities use the system for producing audio announcements to citizens in anti-disaster wireless communication and the national early warning system (J-ALERT). |

(2) Smartphone voice interaction | The voice interaction apps for smartphones, such as “Shabette Chara®,” which is provided by NTT Docomo, Inc., and “Yahoo! Audio Assist,” which is provided by Yahoo Japan Corporation, are increasingly used. |

(3) Road traffic information and car navigation | The system is used for services that provide real-time road traffic information, such as the “road traffic information” of the Japan Road Traffic Information Center, and for car navigation, which must read out an enormous number of place names throughout Japan, such as “Docomo Drive Net Info” of NTT Docomo. |

(4) E-learning | Lightworks (CAREERSHIP®), Tokyo Customs, Chugai Pharmaceutical, Taiho Pharmaceutical, etc. use the system. |

(5) Broadcasting | The system is used by TBS (IRASUTO Virtual Caster), TV Tokyo (Morning Satellite), BS JAPAN (Nikkei Morning Plus), etc. |

(6) Communication Robot | The system is used in many robots such as “Pepper” by SoftBank Robotics Corp and Matsukoroid by Matsukoroid Production Committee. |

(7) Public-address in buildings and stations | The system is utilized for announcing information at stations, airports, commercial facilities, such as JR Kyoto Station and Memanbetsu Airport Bldg. |

(8) Automatic answering system | The system is used for notifying library users of the dates when a library is closed by telephone, answering customers’ calls at banks, and attending to customers at call centers. It is applied broadly to automatic answering systems, including telephone banking. |

(9) Reading of websites | The system is utilized as a tool for giving information of websites of municipalities and enterprises throughout Japan with synthesized voices. |

(10) Production of audio files | The system is utilized as a tool for producing audio files used for narrations of e-learning content, guidance about equipment, such as ticket dispensers, and so on. |

(11) Video games | The system is utilized for voice-overs of video games, such as the series of “StarHorse,” an arcade horse racing game provided by SEGA Interactive Co., Ltd., and “Kuma-Tomo (Teddy Together)” of BANDAI NAMCO Entertainment Inc. |

(12) Packaged products for consumers (Package for reading contents aloud) | The system is utilized for producing audio files for packaged products for consumers, including the “VOICEROID®” series offered by AHS Co., Ltd. |

Matsukoroid

| This is an android entertainer developed by making a cast of the entire body, including the head and toes, accurately mimicking facial expressions, behavior, habits, etc., and applying the cutting-edge android technology, with the aim of producing an android that is like two peas in a pod with Matsuko Deluxe. It was born under the supervision of Professor Hiroshi Ishiguro of Osaka University, who is a pioneer in android research.

AITalk®, a speech synthesis engine of AI, Inc., was adopted for producing some voices of “Matsukoroid.” AI, Inc. recorded the actual voices of Matsuko Deluxe in a short period of time, and produced “AITalk® CustomVoice®,” an original phonetic dictionary for speech synthesis. This enabled Matsukoroid to read a variety of texts aloud with the voice of Matsuko Deluxe. Going forward, Matsukoroid will speak with AITalk®, which synthesizes speeches with the voice of Matsuko Deluxe, at events, etc.

(Taken from the website of the company) |

③ Major products

Based on AITalk®, AI, Inc. develops and sells products and services suited for various scenes of corporations and individuals.

Product name | Outline | Application cases |

AITalk® Koe-no-shokunin (Voice Craftsman) | Software for producing narrations, with which you can produce audio files easily just by inputting text into your PC. Anyone can produce high-quality narrations with easy, intuitive procedures. The latest version “AITalk® 4” can adjust emotions. | Narrated video manuals for e-learning, sightseeing guides, public-address announcements, etc. |

AITalk® Koe Plus (Voice Plus) | Add-in software for PowerPoint®, which can add voices to the slides of PowerPoint® easily. You can easily produce high-quality voices in PowerPoint® files. | Production of narrated e-learning content with PowerPoint® only, addition of voices to presentation material for use inside and outside your company, etc. |

AITalk® SDK | This software development kit (SDK) can synthesize speeches freely from human-like, natural voices and offer them via libraries. The latest version “AITalk® 4 SDK” can adjust emotions. | To integrate into package software / voice of automatic telephone answering system / integration into devices/ WEB campaign and WEB service |

AITalk® Server | This engine is suited for cases where a network is used and synthesis is conducted with multitasking, such as automatic answering and online services. | Voice for automatic telephone response / WEB campaign, WEB service |

AITalk® Custom Voice® | This is a service of recording the voices, etc. of entertainers, voice actors, and customers and producing an original Japanese phonetic dictionary for speech synthesis. Just by inputting text, it is possible to produce speeches with real voices. | It can be applied to a variety of content, including online campaigns, smartphone apps, and video games. |

Kantan (Easy)! AITalk® | Packaged software for individual users, with which you can produce high-quality narrations just by inputting text. | Inputting your own voices for narrations of videos, production of original audio teaching material which can be used in trains and vehicles for listening. |

AITalk® Anata-no-koe (Your Voice) | Your voice, etc. can be reproduced with the speech synthesis technology. With your PC and this packaged software, including Custom Voice®, you can produce speeches in various words anywhere, anytime. | It is possible to read a closing address of a funeral with the voice of the deceased. You can give lectures and presentations without speaking, by synthesizing speeches with your voice. |

(3) The next-generation speech synthesis engine, AITalk®5 (provisional name)

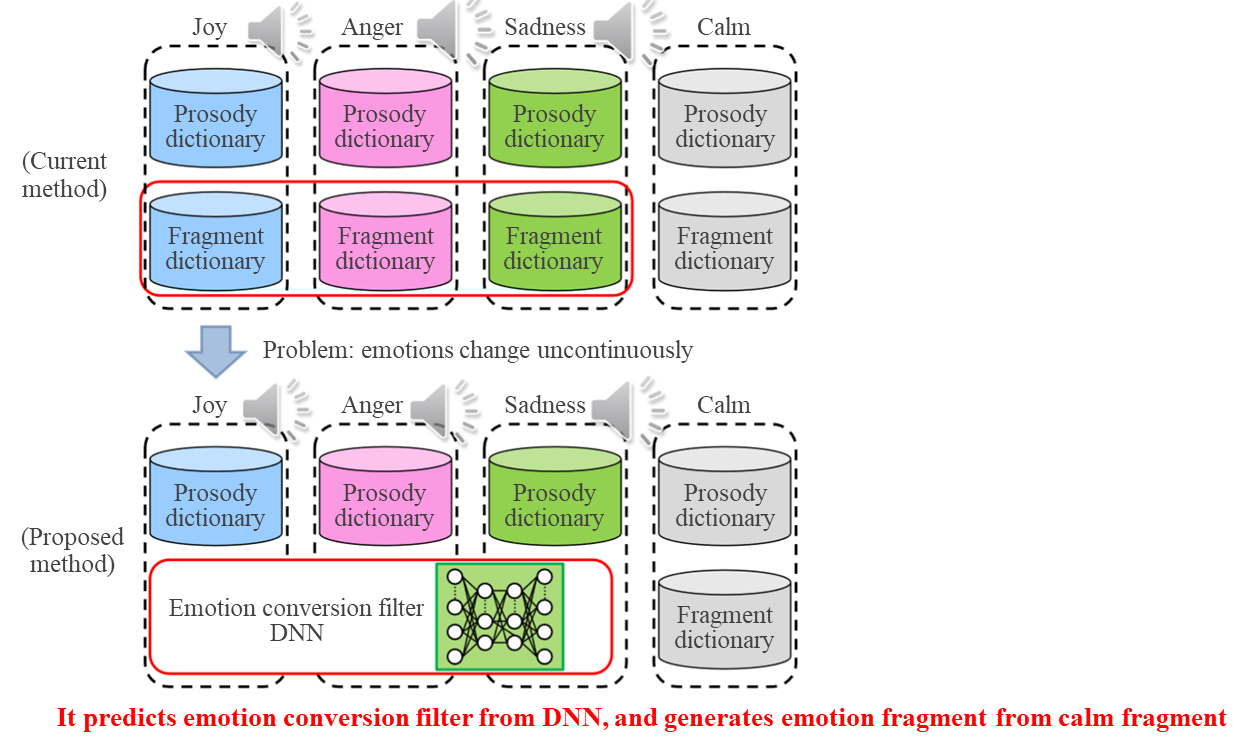

AI is working on commercializing the next-generation speech synthesis engine, AITalk®5 (provisional name), which utilizes the Deep Neural Network (DNN) to express emotions with the speech synthesis engine.

(Background for development)

The company’s current AITalk®4, which is a corpus-based speech synthesis engine, requires a separate emotion sound dictionary for each emotion, such as happiness, sadness, and anger, that is needed for interactive speech synthesis. This approach is costly, and the changes in emotion in the synthesized speech were erratic rather than smooth.

Therefore, over the 18 months from July 2017 to December 2018, the company worked under a “subsidies for developing new products and technologies” project on achieving a smooth transition from a calm state to an emotional state, by predicting emotion change filters with a DNN and producing emotion elements from normal elements; the results gave it enough confidence to commercialize the technology. The company is currently applying for a patent on that system.
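The difference between an abrupt switch of emotion dictionaries and a smooth transition can be pictured with a toy parameter track. The sketch below is purely illustrative and is not the company's DNN method: it replaces the predicted emotion change filter with a plain linear blend, and the pitch values and function names are hypothetical.

```python
def hard_switch(neutral, emotional, n_frames, switch_at):
    """Jump from the neutral value to the emotional value at one frame."""
    return [neutral if i < switch_at else emotional for i in range(n_frames)]


def smooth_blend(neutral, emotional, n_frames):
    """Move linearly from the neutral value to the emotional value."""
    if n_frames == 1:
        return [emotional]
    step = (emotional - neutral) / (n_frames - 1)
    return [neutral + step * i for i in range(n_frames)]


def max_jump(track):
    """Largest frame-to-frame change; a rough measure of abruptness."""
    return max(abs(b - a) for a, b in zip(track, track[1:]))


neutral_pitch_hz = 200.0  # hypothetical calm-state pitch
happy_pitch_hz = 260.0    # hypothetical emotional-state pitch

abrupt = hard_switch(neutral_pitch_hz, happy_pitch_hz, n_frames=7, switch_at=3)
gradual = smooth_blend(neutral_pitch_hz, happy_pitch_hz, n_frames=7)

print("abrupt :", abrupt, "max jump:", max_jump(abrupt))
print("gradual:", gradual, "max jump:", max_jump(gradual))
```

In this toy case the hard switch changes the parameter by 60 Hz in a single frame, while the blended track spreads the same change over six steps of 10 Hz each.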

(Overview of the next-generation speech synthesis engine, AITalk®5 (provisional name))

(1) Characteristics

According to the usage scene, it offers the option of a conventional corpus-based speech synthesis system or the DNN speech synthesis system and has the following characteristics:

1 The system can synthesize a more natural and human-like high-quality sound thanks to the sound improvement achieved by using deep learning. Moreover, it eliminates the problem of jarring transition between emotions associated with AITalk®4 and now can synthesize an emotion-rich voice that transitions smoothly between happiness, sadness, and anger.

2 The conventional AITalk®4 required a separate emotion sound dictionary for each emotion, which entailed recording separate sounds for each of happiness, anger, and sadness. By comparison, the next-generation speech synthesis engine, AITalk®5 (provisional name), utilizes deep learning to create sound dictionaries from a much shorter recording than usual. Shortening the time needed for recording and for creating sound dictionaries reduces the cost of creating them and allows the company to offer the speech synthesis engine at a much lower price.

(2) Products lineup

AITalk®5 SDK: development kit/library

AITalk®5 Custom Voice®: Original sound dictionary creation service

AITalk®5 Editor: narration/guidance sound creation software

AITalk®5 Server: server-based speech synthesis

(3) Release date

Planned in April 2020: SDK/Custom Voice®/Editor

Planned in October 2020: Server

(Taken from the website of the company)

(4) Business model and commercial distribution

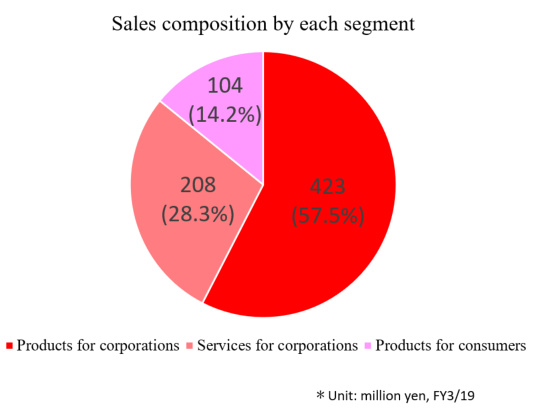

The company’s products and services are classified into “products for corporations,” “services for corporations,” and “products for consumers.”

To corporations, AI, Inc. offers the most appropriate products or cloud services according to the characteristics of each client.

As for marketing targeted at corporations, the company has “Inside sales” staff, who deal with inquiries generated through sales promotion (SEO, email newsletters, news releases, etc.), and “Field sales” staff, who strive to win new customers and increase orders from existing customers; in addition, sales partners sell packaged software.

As for marketing targeted at consumers, the company does not sell its products directly to customers, but entrusts distributors with sale, and receives royalties from them on a quarterly basis.

① Products for corporations

AI, Inc. sells packaged software, grants licenses, and carries out entrusted development.

◎Sale of packaged software

The company sells packaged software with which you can easily produce audio files just by inputting text into your PC.

Through easy, intuitive operation, it is possible to produce high-quality voice-overs.

Major products and services | Business model | Fee example |

AITalk® Koe-no-shokunin (Voice Craftsman)®, AITalk® Koe Plus (Voice Plus) | One-shot revenue type | 900,000 yen for a perpetual license |

◎Licensing

This is a major business model of AI, Inc. The company concludes a licensing contract for use with each client, and receives some fees for the use of the speech synthesis engine.

The company sets the basic license fee, monthly usage fees, royalties (which depend on sales results), and so on individually for each client. The company offers the most appropriate speech synthesis engine according to the purposes of use.

Major products and services | Business model | Fee example |

AITalk® SDK, AITalk® Server, micro AITalk® | Recurring-revenue type | Basic license fee + Royalties (set individually) |

◎Entrusted development

AI, Inc. is entrusted by clients with the development of original phonetic dictionaries for respective clients.

Major products and services | Business model | Fee example |

AITalk® Custom Voice® | One-shot revenue type | 400,000 to 5,000,000 yen according to plans |

② Services for corporations

◎Cloud service

The company offers speech synthesis services utilizing the cloud environment. Users can use services utilizing speech synthesis via the Internet.

Major products and services | Business model | Fee example |

AITalk® WebAPI, AITalk® Web-yomi Shokunin (Website Reading Expert)®, AITalk® Koe-no-shokunin (Voice Craftsman)® Cloud Version | Recurring-revenue type | 5,000 yen/month |

◎ Support services

The company provides clients of products for corporations with continuous technical support.

Major products and services | Business model | Fee example |

Technical support | Recurring-revenue type | Annual contract |

③ Products for consumers

The company sells packaged software, with which you can easily produce audio files.

Major products and services | Business model | Fee example |

Kantan (Easy)! AITalk® AITalk® Anata-no-koe (Your Voice)® VOICEROID® Series-Kotoha, Akane® and Aoi® | One-shot revenue type | The company outsources sales, and sets royalties according to sales performance. |

(5) R&D structure

As of March 31, 2019, the number of R&D staff members was 10. The total R&D cost for the term ended March 2019 was 101 million yen.

Until the term ended March 2018, the “language processing” and “voice processing” groups had engaged in R&D, and in the previous term ended March 2019, the “engine development” group joined them, and they conducted the R&D activities described below.

(Language processing group)

It aims to improve the Japanese language processing technology for speech synthesis.

(1) The company worked to improve the precision of morphological analysis by upgrading the MeCab dictionary development tool and the learning tool and by adding dictionary entries.

(2) The company upgraded MeCab and sped up its operation for speech synthesis, in order to handle tagged text and compress resources for embedded systems.

(3) The company developed a technology for producing a scalable dictionary that can be adjusted according to the purposes of use, while considering the application to various fields, including embedding.

(Voice processing group)

The company is proceeding with the development of a new high-quality speech synthesis engine.

(1) One of the problems with speech synthesis using the deep neural network (DNN) is the deterioration of sound quality when unlearned information is inputted. The company developed a new normalization method, and confirmed that sound quality could be improved.

(2) For the estimation of acoustic parameters using DNN, the company developed a new prosodic model, and confirmed the improvement in quality of prosody prediction. This method is expected to improve quality also for spectrum parameters.

(3) In collaborative research with Nagoya University, the company completed a fundamental study on applying a neural vocoder, which is one of the next-generation DNN voice technologies, to the speech synthesis engine.

(Engine development group)

The company is proceeding with the early practical application of new algorithms for languages and voices they have researched and developed.

(1) The company developed a new engine equipped with the DNN voice quality change technology, and checked its performance.

(2) The company upgraded the API of the speech synthesis engine and added new functions, in order to enable its integration into the multilingual speech synthesis engine of Nuance Communications, Inc. as the Japanese engine.

【1-5 Characteristics, strengths, and competitive advantage】

AI, Inc., which developed AITalk®, a high-quality speech synthesis engine, and offers products and services, has the following characteristics, strengths, and competitive advantage.

(1) The required number of voice samples is small.

The general approach for improving speech synthesis quality in the “corpus-based text-to-speech synthesis” is to increase voice samples. However, it has a disadvantage; if voice samples increase, then recording time is prolonged and the size of a phonetic dictionary increases, augmenting the cost for producing the phonetic dictionary.

AI, Inc. is proceeding with R&D, with the aim of synthesizing high-quality speeches with a small number of voice samples. In general, it is necessary to record voices for several tens of hours (several thousand to ten thousand sentences), but the company can produce a phonetic dictionary with 2 to 6 hours of recording (200 to 600 sentences).

(2) Provision of a variety of speakers

Since a phonetic dictionary can be produced with a small number of voice samples, it is possible to offer a wide array of phonetic dictionaries. At present, the company offers a total of 15 speakers, including 7 female speakers, 4 male speakers, 2 boyish speakers, and 2 girlish speakers.

(3) Experience of producing a large number of custom voices

The production of a phonetic dictionary used to cost tens of millions of yen, but the company developed a technology for producing it with a small number of voice samples at a cost of 0.5 to 5 million yen. As a result, it is now possible to inexpensively produce a phonetic dictionary desired by each user, including the voices of specific voice actors, narrators, and characters, and the scope of application of the speech synthesis engine has expanded.

Up until now, the company has produced over 300 custom voices.

(4) System for offering services of R&D, product development, sale, and support in an integrated manner

Most competitors that offer speech synthesis engines are large manufacturers, in which the R&D section is separated from the product development and sales sections.

Meanwhile, AI, Inc. handles almost all processes by itself, including R&D, product development, sales, and support, so that it can operate its business flexibly and swiftly. For speech synthesis engines for foreign languages, it collaborates with overseas manufacturers.

【1-6 ROE analysis】

| FY 3/16 | FY 3/17 | FY 3/18 | FY 3/19 |

ROE [%] | 15.0 | 15.4 | 17.8 | 16.7 |

Net income margin [%] | 15.09 | 17.03 | 18.51 | 20.38 |

Total asset turnover [times] | 0.84 | 0.77 | 0.83 | 0.73 |

Leverage [times] | 1.19 | 1.17 | 1.16 | 1.12 |

Because the company was listed in June 2018, the procured funds are reflected only in the values as of the end of March 2019, which makes it difficult to compare the values across terms. However, the net income margin for the term ending March 2020 is estimated to be 20%, and ROE is expected to remain high this term.
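The table breaks ROE down into three factors (the DuPont identity). As a rough check, the sketch below multiplies the published factors for each term. The function and variable names are illustrative, and small gaps against the reported ROE should be read as rounding in the published figures.

```python
# DuPont identity: ROE = net income margin x total asset turnover x leverage.
# Inputs are the rounded figures from the table above; names are illustrative.

def dupont_roe(margin_pct: float, turnover: float, leverage: float) -> float:
    """Return ROE in percent from the three DuPont factors."""
    return margin_pct * turnover * leverage

# term -> (net income margin [%], total asset turnover [times], leverage [times])
factors = {
    "FY 3/16": (15.09, 0.84, 1.19),
    "FY 3/17": (17.03, 0.77, 1.17),
    "FY 3/18": (18.51, 0.83, 1.16),
    "FY 3/19": (20.38, 0.73, 1.12),
}
reported = {"FY 3/16": 15.0, "FY 3/17": 15.4, "FY 3/18": 17.8, "FY 3/19": 16.7}

for term, (margin, turnover, leverage) in factors.items():
    roe = dupont_roe(margin, turnover, leverage)
    print(f"{term}: computed {roe:.1f}% vs reported {reported[term]:.1f}%")
```

In FY 3/19 the higher margin is offset by lower turnover and leverage, which is consistent with the funds procured at listing enlarging total assets and equity.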

【1-7 ESG activities】

In the term ended March 2019, AI, Inc. carried out the following activities.

ESG | Theme | Outline |

S: society | (1) Empowerment of women | ・Of 36 employees, 16 (45.7%) are women. ・Of 11 managers, 3 (27.3%) are women. |

| (2) Promotion of child-care support | ・Child-care leave was taken by 2 employees. ・The shortened working hours system was used by 1 employee. |

| (3) Promotion of work-style reform | ・Working environment with little overtime: average overtime of 16 hours/month ・Working environment where employees feel free to take days off: an average of 12 paid holidays taken (26 at a maximum) ・Promotion of part-time workers to full-time employees: in April 2019, two part-time workers were promoted to full-time employees. |

| (4) Promotion of social contribution activities | ・Accepted a social studies tour by students of a middle school ・Plans to accept tours by students of 4 schools in the term ending March 2020. |

G: governance | (1) Dialogue with shareholders and investors | ・Held a briefing session for individual investors once (August). ・Held a briefing session for institutional investors once (November). ・Held 1-on-1 meetings with institutional investors 29 times. ・Was interviewed by magazine reporters and others 18 times. ・Appeared in TV or radio programs 5 times. |

2. The Second Quarter of March 2020 Earning Results

(1) Earnings Results

| 2Q FY 3/19 | Ratio to net sales | 2Q FY 3/20 | Ratio to net sales | YOY | Ratio to the estimates |

Net sales | 294 | 100.0% | 311 | 100.0% | +5.6% | +0.5% |

Gross profit | 235 | 79.8% | 248 | 79.9% | +5.7% | +9.4% |

SG&A expenses | 165 | 56.2% | 185 | 59.5% | +11.7% | -9.1% |

Operating income | 69 | 23.5% | 63 | 20.4% | -8.6% | +168.1% |

Ordinary income | 57 | 19.4% | 63 | 20.4% | +11.1% | +167.9% |

Net income | 42 | 14.6% | 49 | 15.8% | +14.4% | +199.2% |

*Unit: Million yen.

Sales grew, but operating income declined, mainly because of an increase in headcount. The results exceeded the initial estimates.

Sales were 311 million yen, up 5.6% year-on-year. The sales of products and services provided to corporations grew while the sales of products to consumers declined.

SG&A expenses increased 11.7% year on year due to the bolstering of the workforce, and operating income fell 8.6% year on year to 63 million yen. Ordinary income increased 11.1% year on year to 63 million yen, as the temporary expenses attributable to the stock market listing no longer arose.

SG&A expenses were lower than estimated because the company postponed some recruitment and cut recruitment costs. As a result, profit was substantially higher than the estimate.

From April 2019 to the end of October, the company hired 2 employees in marketing, 5 in product development, and 1 in administration. This completes the recruitment plan for the term, but the company remains open to further hiring if suitable talent is available.
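The year-on-year changes and ratios to net sales in the earnings table follow from simple arithmetic. The sketch below recomputes a few of them from the rounded million-yen figures; the helper names are illustrative. Because the table is rounded to the nearest million yen, the recomputed values can differ from the published ones by a few tenths of a point.

```python
# Recompute YoY change and ratio to net sales from the rounded table figures.
# Helper names are illustrative; inputs are in million yen.

def yoy_pct(previous: float, current: float) -> float:
    """Year-on-year change in percent."""
    return (current - previous) / previous * 100

def ratio_to_sales_pct(item: float, net_sales: float) -> float:
    """Share of net sales in percent."""
    return item / net_sales * 100

net_sales = (294, 311)        # 2Q FY 3/19, 2Q FY 3/20
operating_income = (69, 63)   # 2Q FY 3/19, 2Q FY 3/20

print(f"Net sales YoY: {yoy_pct(*net_sales):+.1f}%")
print(f"Operating income YoY: {yoy_pct(*operating_income):+.1f}%")
print(f"Operating margin, 2Q FY 3/20: "
      f"{ratio_to_sales_pct(operating_income[1], net_sales[1]):.1f}%")
```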

(2) Sales in each segment

| 2Q FY 3/19 | Composition ratio | 2Q FY 3/20 | Composition ratio | YOY |

Products for corporations | 147 | 47.4% | 155 | 50.0% | +5.5% |

Services for corporations | 94 | 30.3% | 111 | 36.0% | +18.6% |

Products for consumers | 52 | 17.0% | 43 | 14.0% | -17.3% |

Total | 294 | 100.0% | 311 | 100.0% | +5.6% |

*Unit: Million yen.

Products for corporations

Sales grew in the anti-disaster field while sales of packaged products (Voice Craftsman and Voice Plus) performed well.

Services for corporations

“my daiz®” service of NTT Docomo contributed significantly. (my daiz® gives the most appropriate proposal to each user through the dialogue with the characters of my daiz and agents of each service.)

Products for consumers

The sales of the VOICEROID series were sluggish due to the delay in releasing new products.

(3) Financial Conditions and Cash Flow

◎Major BS

| End of March 2019 | End of September 2019 | | End of March 2019 | End of September 2019 |

Current assets | 1,115 | 1,089 | Current liabilities | 105 | 61 |

Cash and deposits | 970 | 983 | Trade payables | 3 | 2 |

Trade receivables | 130 | 86 | Other payables | 35 | 18 |

Noncurrent assets | 96 | 97 | Noncurrent liabilities | 2 | 2 |

Property, plant and equipment | 13 | 16 | Total liabilities | 108 | 64 |

Intangible assets | 15 | 12 | Net assets | 1,103 | 1,122 |

Investments and other assets | 67 | 68 | Retained earnings | 761 | 770 |

Total assets | 1,211 | 1,186 | Total liabilities and net assets | 1,211 | 1,186 |

*Unit: Million yen.

| End of March 2019 | End of September 2019 |

Equity ratio | 91.1% | 94.6% |

The equity ratio rose 3.5 points from the end of the previous term to 94.6%.
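The equity ratio can be checked against the balance sheet figures above. The sketch below assumes that net assets are used as equity, since the table gives no separate shareholders' equity line; the function name is illustrative. The change between the two dates is a difference of two percentages, so it is expressed in percentage points.

```python
# Equity ratio = net assets / total assets.
# Assumption: net assets stand in for equity (no separate equity line is shown).
# Inputs are in million yen, taken from the balance sheet table.

def equity_ratio_pct(net_assets: float, total_assets: float) -> float:
    """Return the equity ratio in percent."""
    return net_assets / total_assets * 100

end_march_2019 = equity_ratio_pct(1103, 1211)
end_september_2019 = equity_ratio_pct(1122, 1186)

# Difference of the rounded ratios, in percentage points.
change_points = round(end_september_2019, 1) - round(end_march_2019, 1)

print(f"End of March 2019: {end_march_2019:.1f}%")
print(f"End of September 2019: {end_september_2019:.1f}%")
print(f"Change: {change_points:+.1f} points")
```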

◎Cash flow

| 2Q FY3/19 | 2Q FY3/20 | Increase/decrease |

Operating CF | 45 | 50 | +5 |

Investing CF | -1 | -6 | -5 |

Free CF | 44 | 44 | -0 |

Financing CF | 246 | -30 | -277 |

Cash and cash equivalents | 927 | 983 | +56 |

*Unit: Million yen.

Financing CF turned negative, in the absence of the proceeds from the issuance of shares that were recorded in the same period of the previous term.

The cash position improved.
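Free CF in the table is consistent with the sum of operating CF and investing CF. A minimal sketch of that check, with an illustrative function name:

```python
# Free cash flow as the sum of operating and investing cash flow,
# which matches the figures in the cash flow table (million yen).

def free_cash_flow(operating_cf: int, investing_cf: int) -> int:
    """Return free cash flow; investing CF is negative when cash is spent."""
    return operating_cf + investing_cf

first_half_fy2019 = free_cash_flow(45, -1)  # 2Q FY3/19
first_half_fy2020 = free_cash_flow(50, -6)  # 2Q FY3/20

print(first_half_fy2019, first_half_fy2020)
```

The higher operating CF was absorbed by larger investing outflows, which is why free CF is flat between the two periods.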

(4) Topics

◎ Conclusion of a license agreement with Cerence Inc. for commercializing a multilingual speech synthesis engine for automobiles

In November 2019, Cerence Inc. signed a license agreement with AI, Inc. to use the basic technology of its high-quality Japanese speech synthesis engine, AITalk®.

(Overview of Cerence Inc.)

Cerence Inc. was newly established in October 2019, when the automotive department of Nuance Communications Inc., which had signed a technical cooperation agreement with AI, Inc., became independent. It provides solutions for the automotive industry.

It has specialized expertise in fields such as AI, NLU (natural language understanding), voiceprint verification, gesture and gaze recognition, and AR (augmented reality), and is an innovation partner of major automotive manufacturers. It is also expanding its business in fields such as connected cars, automated driving, and electric vehicles.

Cerence Inc.'s "Cerence TTS" speech engine features rich expression, enhanced multilingual support, and optimized reading of long texts with next-generation speech synthesis technology for high quality speech output.

It also provides a smooth blend of static and dynamic speech output, and improves the quality and accuracy of that output with optimized text processing, a more comprehensive phonetic dictionary, and a full speech refresh in many languages.

(Details and background of the license contract)

AI, Inc. will provide the basic technology of its high-quality Japanese speech synthesis engine, AITalk®, to Cerence Inc., and Cerence Inc. will embed it in “Cerence TTS.” As a result, users of “Cerence TTS” will be able to use AITalk® as one of its multilingual options. If “Cerence TTS” is adopted by automobile manufacturers and other businesses, license fees based on the number of in-vehicle devices, etc. will be paid to AI, Inc.

This license agreement is a transfer of the technical cooperation agreement with Nuance Communications Inc. to Cerence Inc., which is a reaffirmation of Cerence’s appreciation of AITalk®.

◎ Commercialization of a next-generation speech synthesis engine

In September 2019, AI announced the commercialization of the next-generation speech synthesis engine, AITalk®5 (provisional name), which uses a deep neural network (DNN) to reproduce emotion in synthesized speech.

(Refer to “1-4 Business details”)

The next-generation speech synthesis engine, AITalk®5 (provisional name), offers the option of a conventional corpus-based speech synthesis system or the DNN speech synthesis system according to the usage scene.

The system can synthesize more natural, human-like, high-quality speech thanks to the improvement in sound quality achieved with deep learning. Moreover, shortening the time needed for recording and for creating sound dictionaries reduces the cost of creating them and allows the company to offer the speech synthesis engine at a much lower price.

◎ Multilingual applications for the disaster prevention radio system

As the number of foreign visitors to Japan increases, the need for multilingual support in the disaster prevention radio system is growing. The company can supplement the Japanese-language broadcasts made with AITalk® with foreign languages (English, Chinese, Korean, Spanish, Portuguese, etc.).

Until now, broadcasts have used Japanese speech generated by AITalk® from Japanese text. In the future, the company will additionally translate the Japanese text into foreign languages and generate foreign-language broadcasts using a foreign-language speech synthesis engine.

For the translation engine and the foreign-language speech synthesis engine, the company will strengthen cooperation with other companies.

◎ Presentations in conferences and publishing academic papers

The company presented its research results at the Music Symposium (June 2019, Kyoto), in the IEICE (The Institute of Electronics, Information and Communication Engineers) Japanese edition (D) (July 2019), and at the 10th ISCA Speech Synthesis Workshop, a satellite workshop of Interspeech 2019 (September 2019), among others.

Interspeech is a major international conference in the field of speech research.

3. Fiscal Year March 2020 Earnings Estimates

(1) Earnings Estimates

| FY 3/19 | Ratio to net sales | FY 3/20 (Est.) | Ratio to net sales | YOY | Progress ratio |

Net sales | 737 | 100.0% | 800 | 100.0% | +8.5% | 38.9% |

Operating income | 211 | 28.6% | 220 | 27.5% | +4.2% | 28.8% |

Ordinary income | 202 | 27.4% | 220 | 27.5% | +8.8% | 28.8% |

Net income | 150 | 20.4% | 160 | 20.0% | +6.5% | 30.7% |

*Unit: Million yen. The estimates were announced by the company.

The earnings forecast is unchanged. Sales and profit are estimated to grow.

It is projected that sales will increase 8.5% year on year to 800 million yen and operating income will rise 4.2% year on year to 220 million yen.

Due to the expansion of the speech synthesis market, sales and profit are forecasted to increase like in the previous term.

The dividend is estimated at 6 yen per share, up 1 yen per share from the ordinary dividend of 5 yen per share in the previous term. The estimated payout ratio is 18.3%.

Incidentally, the 8 yen per share paid in the term ended March 2019 consisted of an ordinary dividend of 5 yen per share and a commemorative dividend of 3 yen per share.
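The estimated payout ratio can be reproduced from the estimated DPS and EPS given in this report (6.00 yen and 32.84 yen). A minimal sketch, with an illustrative function name:

```python
# Payout ratio = dividend per share / earnings per share.
# DPS and EPS are the company estimates for FY March 2020 quoted in this report.

def payout_ratio_pct(dps: float, eps: float) -> float:
    """Return the dividend payout ratio in percent."""
    return dps / eps * 100

estimated_payout = payout_ratio_pct(dps=6.00, eps=32.84)
print(f"Estimated payout ratio: {estimated_payout:.1f}%")
```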

(2) Sales in each segment

| FY 3/19 | Composition ratio | FY 3/20 (Est.) | Composition ratio | YOY | Progress ratio |

Products for corporations | 423 | 57.5% | 451 | 56.4% | +6.4% | 34.4% |

Services for corporations | 208 | 28.3% | 229 | 28.6% | +9.7% | 48.5% |

Products for consumers | 104 | 14.2% | 120 | 15.0% | +14.9% | 35.8% |

Total | 737 | 100.0% | 800 | 100.0% | +8.5% | 38.9% |

*Unit: Million yen.
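The progress ratios in the table above are the first-half results divided by the full-year estimates. The sketch below recomputes them from the segment sales reported for 2Q FY 3/20 and the FY 3/20 estimates; the function and dictionary names are illustrative.

```python
# Progress ratio = first-half actual / full-year estimate.
# Inputs are in million yen: (2Q FY 3/20 actual, FY 3/20 estimate).

def progress_pct(actual: float, full_year_estimate: float) -> float:
    """Return progress toward the full-year estimate in percent."""
    return actual / full_year_estimate * 100

segments = {
    "Products for corporations": (155, 451),
    "Services for corporations": (111, 229),
    "Products for consumers": (43, 120),
    "Total": (311, 800),
}

progress = {name: progress_pct(a, e) for name, (a, e) in segments.items()}
for name, value in progress.items():
    print(f"{name}: {value:.1f}%")
```

On these figures, services for corporations are the closest to the halfway mark, while both product segments need a stronger second half to reach the estimates.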

(Products and services for corporations)

As the market expands, the number of inquiries is expected to increase further. Sales of packaged products (Koe-no-shokunin and Koe Plus), which are used in place of voice recording, are estimated to increase, as are sales of multilingual versions with a translation function in the anti-disaster field.

(Products for consumers)

It is estimated that the sales of the VOICEROID series for producing narrations of videos, etc. will be healthy.

The company will also release new products.

4. Conclusions

We were worried about the delay in the release of the company’s original products for Nuance Communications, which had been scheduled for June 2019. However, Nuance Communications’ automotive department became independent as the newly established Cerence Inc., to which the agreement was transferred. The very existence of automobiles is expected to change along with concepts such as C.A.S.E., or Connectivity, Autonomous, Sharing/Subscription, and Electrification. Speech synthesis engines are also expected to be used well beyond conventional car navigation, and demand for them is expected to increase rapidly. We are paying attention to the company’s business expansion in the medium to long term, as it has started commercializing the next-generation speech synthesis engine, AITalk®5 (provisional name).

On the other hand, sales and income are expected to keep increasing this term, but the growth rates are in single digits, which leaves something to be desired for a company that is expected to grow. We look forward to seeing how sales and profit perform as the use of its original products and services increases.

<Reference: Regarding Corporate Governance>

◎Organization type and the composition of directors

Organization type | Company with audit and supervisory committee |

Directors | 5 directors, including 3 outside ones |

◎Corporate Governance Report

Last update date: June 27, 2019

<Basic policy>

Recognizing that in order for an enterprise to grow and develop stably, it is indispensable to enhance the efficiency and soundness of business administration and establish a fair, transparent management system, the company considers thoroughgoing corporate governance as the most important mission.

<Reasons for Non-compliance with the Principles of the Corporate Governance Code (Excerpts)>

Our company follows all of the basic principles of the Corporate Governance Code.

This report is intended solely for information purposes, and is not intended as a solicitation to invest in the shares of this company. The information and opinions contained within this report are based on data made publicly available by the Company, and come from sources that we judge to be reliable. However, we cannot guarantee the accuracy or completeness of the data. This report is not a guarantee of the accuracy, completeness or validity of said information and/or opinions, nor do we bear any responsibility for the same. All rights pertaining to this report belong to Investment Bridge Co., Ltd., which may change the contents thereof at any time without prior notice. All investment decisions are the responsibility of the individual and should be made only after proper consideration. Copyright (C) 2019 Investment Bridge Co., Ltd. All Rights Reserved.